Artificial intelligence and machine learning are terms which have been thrown around a lot in the tech industry over the last few years, but what exactly do they mean? Anyone vaguely familiar with sci-fi tropes will probably have an idea about AI, though they may view it as a little more sinister than what's around today.

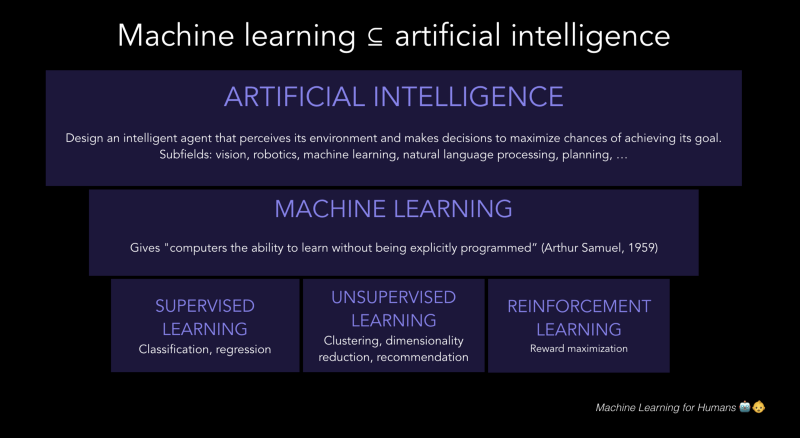

The two terms are often conflated and, incorrectly, used interchangeably, particularly by marketing departments that want to make their technology sound sophisticated. In fact, artificial intelligence and machine learning are very different things, with very different implications for what computers can do and how they interact with us.

It starts with Neural Networks

Machine learning is the computing paradigm that's led to the growth of "Big Data" and AI. It's based on the development of neural networks and deep learning. Typically this is described as imitating the way humans learn, but that's a bit of a misnomer. Machine learning is really a matter of statistical analysis and iterative learning.

The nature of any particular neural network can be very complicated, but the key to the way they function is by applying weights (or factors of importance) to some attribute of the input. Using networks of various weights and layers, it's possible to produce a probability or estimation that your input matches one or more of the defined outputs.
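
As a rough illustration of the weights-and-layers idea, here is a minimal Python sketch of a tiny two-layer network. Everything in it is an assumption made for the example: the three input attributes, the hand-picked weight values, and the names `layer`, `softmax`, and `predict` do not come from any real product or library.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def layer(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs, plus a bias."""
    return [sum(w * x for w, x in zip(neuron_weights, inputs)) + b
            for neuron_weights, b in zip(weights, biases)]

def relu(values):
    """Simple activation: negative values are clipped to zero."""
    return [max(0.0, v) for v in values]

# Hypothetical weights, chosen by hand purely for illustration.
HIDDEN_W = [[1.5, -0.5, 1.0], [-1.0, 2.0, -0.5]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[2.0, -1.0], [-1.5, 1.5]]
OUTPUT_B = [0.0, 0.0]
LABELS = ["flower", "other plant"]

def predict(features):
    """Run the input through both layers and return a probability per label."""
    hidden = relu(layer(features, HIDDEN_W, HIDDEN_B))
    scores = layer(hidden, OUTPUT_W, OUTPUT_B)
    return dict(zip(LABELS, softmax(scores)))

# Made-up input attributes, each scaled to the range 0..1:
# petal-likeness, stem length, colour saturation.
probabilities = predict([0.9, 0.2, 0.8])
print(probabilities)
```

With these particular hand-set weights, the "flower" label comes out as the more probable one. Change the weights and the answer changes with them, which is exactly why setting them by hand does not scale.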

The problem with this type of computing, just like regular programming, is that it depends on how the human programmer sets it up. Readjusting all these weights by hand to refine the output accuracy could take too many man-hours to be feasible. A neural network transitions into the realm of machine learning once a corrective feedback loop is introduced.

Phones with a Neural Processing Unit like the Huawei Mate 10 Pro can distinguish between flowers and other plants in the camera app

Enter Machine Learning

By monitoring the output, comparing it to the expected result, and gradually tweaking neuron weights, a network can train itself to improve accuracy. The important part here is that a machine learning algorithm is capable of learning and acting without programmers specifying every possibility within the data set. You don't have to pre-define all the possible ways a flower can look for a machine learning algorithm to figure out what a flower looks like.
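
The feedback loop can be sketched in a few lines of Python. This is a deliberately simplified example, assuming a single artificial neuron (logistic regression trained by gradient descent) rather than a full network, and the training data, function names, and learning rate are all invented for illustration.

```python
import math
import random

def sigmoid(z):
    """Squash any number into the range 0..1 so it reads as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, features):
    """Weighted sum of the inputs, turned into a probability of 'flower'."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

def train(examples, epochs=2000, learning_rate=0.5, seed=0):
    """Repeatedly compare predictions to known labels and nudge the weights."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in examples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # Feedback signal: how far the output is from the expected result.
            error = predict(weights, bias, features) - label
            # Nudge each weight in the direction that shrinks the error.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

# Made-up labelled examples: (petal-likeness, leaf-likeness) -> 1 = flower, 0 = other.
EXAMPLES = [
    ([0.9, 0.1], 1), ([0.8, 0.3], 1), ([0.7, 0.2], 1),
    ([0.2, 0.9], 0), ([0.1, 0.7], 0), ([0.3, 0.8], 0),
]

weights, bias = train(EXAMPLES)
accuracy = sum((predict(weights, bias, f) > 0.5) == bool(label)
               for f, label in EXAMPLES) / len(EXAMPLES)
print(weights, bias, accuracy)
```

Nobody tells the program which attribute matters or by how much. The weights start out almost random and are corrected a little after every example, which is the "learning" in machine learning.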

Stanford University defines machine learning as "the science of getting computers to act without being explicitly programmed".

Training a network can be done in a number of different ways, but all involve a brute force iterative approach to maximising output accuracy and training the optimum paths through the network. However, this self-training is still a more efficient process than optimizing an algorithm by hand, and it enables algorithms to sift and sort through much larger quantities of data in much faster times than would otherwise be possible.

Once trained, a machine learning algorithm is capable of sorting brand new inputs through the network with great speed and accuracy in real time. This makes it an essential technology for computer vision, voice recognition, language processing, and also scientific research projects. Neural networks, and deep learning in particular, are currently the most popular way to do machine learning. There are other ways to achieve it as well, but the method described above is currently the best we have.

What AI is and isn't

Machine learning is a clever processing technique, but it doesn't possess any real intelligence. An algorithm doesn't have to understand exactly why it self-corrects, only how it can be more accurate in the future. However, once the algorithm has learned, it can be used in systems that actually appear to possess intelligence. A good way to define artificial intelligence would be the application of machine learning that interacts with or imitates humans in a convincingly intelligent way.

A machine learning algorithm that can sift through a database of images and identify the main object in the picture doesn't really seem intelligent, because it's not applying that information in a human-like way. Implement the same algorithm in a system with cameras and speakers, which can detect objects placed in front of it and speak back the name in real time, and it suddenly seems much more intelligent. Even more so if it were able to tell the difference between healthy and unhealthy foods, or differentiate everyday objects from weapons.

A good definition of AI is a machine that can perform tasks characteristic of human intelligence, such as learning, planning, and decision making.

Artificial intelligence can be broken down into two major groups: applied and general. Applied artificial intelligence is much more feasible right now. It's tied more closely to the machine learning examples above and designed to perform specific tasks. This could be trading stocks, traffic management in a smart city, or helping to diagnose patients. The task or area of intelligence is limited, but there's still scope for applied learning to improve the AI's performance.

General artificial intelligence is, as the name implies, broader and more capable. It's able to handle a wider range of tasks, understand pretty much any data set, and therefore appears to think more broadly, just like humans. General AI would theoretically be able to learn outside of its original knowledge set, potentially leading to runaway growth in its abilities. Interestingly enough, the first machine learning discoveries reflected ideas of how the brain develops and people learn.

Machine learning, as part of a bigger complex system, is essential to achieving software and machines capable of performing tasks characteristic of and comparable to human intelligence — very much the definition of AI.

Now and into the future

Despite all the marketing jargon and technical talk, both machine learning and artificial intelligence applications are already here. We are still some way off from living alongside general AI, but if you've been using Google Assistant or Amazon Alexa, you're already interacting with a form of applied AI. Machine learning used for language processing is one of the key enablers of today's smart devices, though they certainly aren't intelligent enough to answer all your questions.

The smart home is just the latest use case. Machine learning has been employed in the realm of big data for a while now, and these use cases are increasingly encroaching into AI territory as well. Google uses it for its search engine tools. Facebook uses it for advertising optimization. Your bank probably uses it for fraud prevention.

There's a big difference between machine learning and artificial intelligence, though the former is a very important component of the latter. We'll almost certainly continue to hear plenty of talk about both throughout 2018 and beyond.

from Android Authority http://ift.tt/2Gxz3hB
